Hadoop Introduction


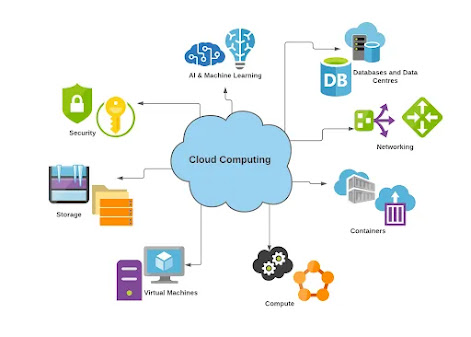

Hadoop is a popular open-source implementation of MapReduce, a programming model designed for deep analysis and transformation of very large data sets. Hadoop enables you to explore complex data, using custom analyses tailored to your information and questions.

Hadoop allows unstructured data to be distributed across hundreds or thousands of machines forming shared-nothing clusters, and runs Map/Reduce routines on the data in that cluster. Hadoop has its own filesystem, which replicates data to multiple nodes so that if one node holding data goes down, the data can still be retrieved from other nodes (at least 2 others under the default replication factor of 3). This protects data availability against node failure, which is critical when a cluster has many nodes (in effect, RAID at the server level).

Hadoop has its origins in Apache Nutch, an open-source web search engine, itself a part of the Lucene project. Building a web search engine from scratch was an ambitious goal: not only is the software required to crawl and index websites complex to write, but it is also a challenge to run without a dedicated operations team, since there are so many moving parts. It's expensive too: Mike Cafarella and Doug Cutting estimated that a system supporting a 1-billion-page index would cost around half a million dollars in hardware, with a monthly running cost of $30,000.

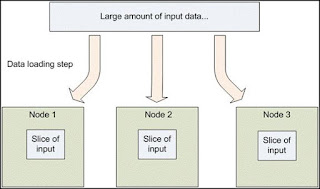

Introduction of Hadoop

In a Hadoop cluster, data is distributed to all the nodes of the cluster as it is being loaded in. The Hadoop Distributed File System (HDFS) splits large data files into chunks, which are managed by different nodes in the cluster. In addition, each chunk is replicated across several machines, so that a single machine failure does not make any data unavailable. An active monitoring system re-replicates the data when a failure leaves some chunks with fewer copies than intended. Even though the file chunks are replicated and distributed across several machines, they form a single namespace, so their contents are universally accessible.
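The chunking and replication described above can be illustrated with a toy model. This is a minimal sketch, not HDFS's actual implementation: the function names `split_into_chunks`, `place_replicas`, and `re_replicate`, the round-robin placement policy, and the tiny chunk size are all assumptions made for illustration.

```python
def split_into_chunks(data, chunk_size):
    """Cut data into consecutive chunks of at most chunk_size bytes."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def place_replicas(num_chunks, nodes, replication):
    """Assign each chunk to `replication` distinct nodes (hypothetical round-robin policy)."""
    if replication > len(nodes):
        raise ValueError("not enough nodes for the requested replication")
    return {
        chunk_id: {nodes[(chunk_id + k) % len(nodes)] for k in range(replication)}
        for chunk_id in range(num_chunks)
    }


def re_replicate(placement, failed_node, live_nodes, replication):
    """Forget the failed node, then copy under-replicated chunks to live nodes."""
    for holders in placement.values():
        holders.discard(failed_node)
        for node in live_nodes:
            if len(holders) >= replication:
                break
            holders.add(node)  # no effect if this node already holds the chunk
    return placement


nodes = ["node1", "node2", "node3", "node4"]
chunks = split_into_chunks(b"abcdefghij", chunk_size=4)
placement = place_replicas(len(chunks), nodes, replication=3)

# Simulate losing node2 and restoring three copies of every chunk.
survivors = [n for n in nodes if n != "node2"]
placement = re_replicate(placement, "node2", survivors, replication=3)
```

After the simulated failure, every chunk is again held by three nodes, none of which is the failed one. A real cluster makes placement decisions using far more information (rack layout, disk usage, and so on) than this sketch does.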

Data is conceptually record-oriented in the Hadoop programming framework. Individual input files are broken into lines or into other formats specific to the application logic. Each process running on a node in the cluster then processes a subset of these records. The Hadoop framework then schedules these processes in proximity to the location of data/records using knowledge from the distributed file system.

Since files are spread across the distributed file system as chunks, each compute process running on a node operates on a subset of the data. The data a node operates on is chosen based on its locality to that node: most data is read from the local disk straight into the CPU, alleviating strain on network bandwidth and preventing unnecessary network transfers. This strategy of moving the computation to the data, instead of moving the data to the computation, allows Hadoop to achieve high data locality, which in turn results in high performance.
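The idea of moving computation to the data can be sketched as a small scheduling function. This is an illustrative assumption about how such a policy might look, not Hadoop's scheduler: `schedule_tasks`, the greedy strategy, and the slot counts are all hypothetical.

```python
def schedule_tasks(chunk_locations, free_slots):
    """Assign each chunk to a node, preferring a node that already stores it.

    chunk_locations: chunk id -> list of nodes holding a replica
    free_slots: node -> number of tasks the node can still accept
    Returns: chunk id -> (node, is_local)
    """
    slots = dict(free_slots)  # work on a copy so the caller's dict is untouched
    assignment = {}
    for chunk_id, holders in chunk_locations.items():
        local = [node for node in holders if slots.get(node, 0) > 0]
        if local:
            node, is_local = local[0], True
        else:
            # No replica holder has capacity: run elsewhere and read over the network.
            candidates = [node for node, free in slots.items() if free > 0]
            if not candidates:
                raise RuntimeError("no free task slots")
            node, is_local = candidates[0], False
        slots[node] -= 1
        assignment[chunk_id] = (node, is_local)
    return assignment


locations = {"c0": ["node1", "node2"], "c1": ["node1"], "c2": ["node1"]}
plan = schedule_tasks(locations, {"node1": 2, "node2": 1, "node3": 1})
```

Here `c0` and `c1` run on `node1`, which stores them, while `c2` falls back to `node2` because `node1` has no slots left. Only that last task needs a network transfer.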

HOW IT WORKS:

Hadoop limits the amount of communication that processes can perform, as each individual record is processed by a task in isolation from the others. While this sounds like a major limitation at first, it makes the whole framework much more reliable. Hadoop will not run just any program and distribute it across a cluster. Programs must be written to conform to a particular programming model, named "MapReduce."

In MapReduce, records are processed in isolation by tasks called Mappers. The output from the Mappers is then brought together into a second set of tasks called Reducers, where results from different mappers can be merged together.
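The Mapper and Reducer roles can be illustrated with a word count written in plain Python. This is a single-process sketch of the programming model only; it does not use Hadoop's API, and the names `mapper`, `reducer`, and `run_job` are chosen for illustration.

```python
from collections import defaultdict


def mapper(record):
    """Emit a (word, 1) pair for every word in one input line."""
    for word in record.lower().split():
        yield word, 1


def reducer(key, values):
    """Merge all the counts that were emitted for one word."""
    return key, sum(values)


def run_job(records):
    # Map phase: each record is handled independently of the others.
    mapped = [pair for record in records for pair in mapper(record)]

    # Grouping step: collect every value emitted under the same key.
    grouped = defaultdict(list)
    for key, value in mapped:
        grouped[key].append(value)

    # Reduce phase: one reducer call per distinct key.
    return dict(reducer(key, values) for key, values in sorted(grouped.items()))


counts = run_job(["the quick fox", "the lazy dog", "the fox"])
```

In this run `counts["the"]` is 3 and `counts["fox"]` is 2. Because `mapper` never looks at more than one record, the map phase could be split across many machines without changing the result.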

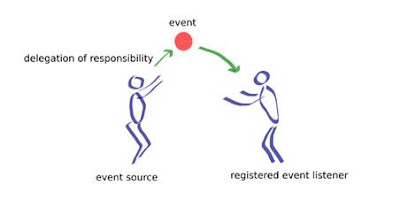

Separate nodes in a Hadoop cluster still communicate with one another. However, in contrast to more conventional distributed systems where application developers explicitly marshal byte streams from node to node over sockets or through MPI buffers, communication in Hadoop is performed implicitly. Pieces of data can be tagged with key names which inform Hadoop how to send related bits of information to a common destination node. Hadoop internally manages all of the data transfer and cluster topology issues.
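One way to picture how a key name determines a destination is hash partitioning: the reducer is chosen from the key alone, so equal keys always land together. The sketch below is an assumption-laden illustration, not Hadoop's code; `partition` and `route` are hypothetical names, and CRC32 is used only because Python's built-in string hash varies between runs.

```python
import zlib


def partition(key, num_reducers):
    """Pick a reducer index from the key alone, so equal keys always agree."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def route(pairs, num_reducers):
    """Group (key, value) pairs into one bucket per reducer."""
    buckets = [[] for _ in range(num_reducers)]
    for key, value in pairs:
        buckets[partition(key, num_reducers)].append((key, value))
    return buckets


pairs = [("fox", 1), ("dog", 1), ("fox", 1), ("the", 1), ("dog", 1)]
buckets = route(pairs, num_reducers=3)

# Record which bucket(s) each key ended up in; every key should have exactly one.
destinations = {}
for index, bucket in enumerate(buckets):
    for key, _ in bucket:
        destinations.setdefault(key, set()).add(index)
```

The user code only supplies keys. Which machine hosts each bucket, and how the bytes get there, is left to the framework.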

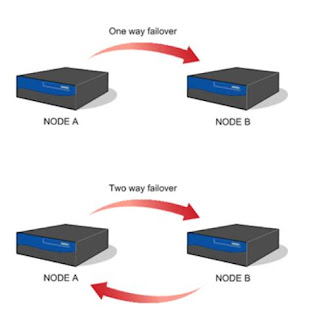

By restricting the communication between nodes, Hadoop makes the distributed system much more reliable. Individual node failures can be worked around by restarting tasks on other machines. Since user-level tasks do not communicate explicitly with one another, no messages need to be exchanged by user programs, nor do nodes need to roll back to pre-arranged checkpoints to partially restart the computation. The other workers continue to operate as though nothing went wrong, leaving the challenging aspects of partially restarting the program to the underlying Hadoop layer.
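Restarting a failed task on another machine can be sketched as a simple retry loop. This is a toy model under stated assumptions, not how Hadoop implements recovery: `run_with_retries`, `flaky_count`, and the use of `RuntimeError` to stand in for a node failure are all hypothetical.

```python
def run_with_retries(task, chunk, nodes, max_attempts=3):
    """Run task(chunk, node), moving on to the next node whenever an attempt fails."""
    errors = []
    for node in nodes[:max_attempts]:
        try:
            return task(chunk, node), node
        except RuntimeError as exc:  # stands in for a node or task failure
            errors.append(f"{node}: {exc}")
    raise RuntimeError("all attempts failed: " + "; ".join(errors))


def flaky_count(chunk, node):
    """Hypothetical task: counts words, with 'node1' simulated as being down."""
    if node == "node1":
        raise RuntimeError("node unreachable")
    return len(chunk.split())


result, ran_on = run_with_retries(flaky_count, "a b c", ["node1", "node2", "node3"])
```

The retry is safe because the task depends only on its own input chunk. No other task has to be notified or rolled back, which mirrors the point made above about isolated tasks.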
