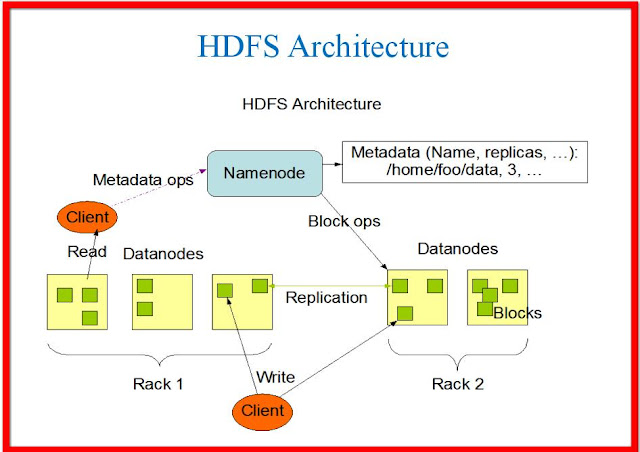

HDFS & Its Architecture

HDFS - Hadoop Distributed File System

HDFS is a file system designed for storing very large files with streaming data access patterns, running on clusters of commodity hardware. Inspired by the Google File System (GFS), which Google built in C++ to support its search engine and described publicly in 2003, the Hadoop Distributed File System (HDFS) is a Java-based file system and one of the core components of Hadoop.

With its fault-tolerant and self-healing features, HDFS lets Hadoop harness the full capability of distributed processing by turning a cluster of industry-standard (commodity) servers into a massively scalable pool of storage. To add another feather to its cap, HDFS can store structured, semi-structured or unstructured data in any format, regardless of schema, and is designed specifically for environments where scalability and throughput are critical.

HDFS Concepts

Blocks

A block of HDFS is 64MB, ...
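To make the block idea concrete, here is a minimal sketch of how a file of a given size maps onto fixed-size blocks. It assumes a 64 MB block size, which was the default in Hadoop 1.x (newer releases default to 128 MB via the `dfs.blocksize` setting). The function `split_into_blocks` is hypothetical and only illustrates the arithmetic; it is not part of any Hadoop API.

```python
"""Sketch: map a file size onto fixed-size HDFS-style blocks.

Assumption: a 64 MB block size (the Hadoop 1.x default).
split_into_blocks is a hypothetical helper, not a Hadoop API.
"""
from typing import List, Tuple

MB = 1024 * 1024
BLOCK_SIZE = 64 * MB  # assumed block size


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> List[Tuple[int, int]]:
    """Return (offset, length) pairs, one per block of the file.

    Every block is block_size bytes except possibly the last one,
    which holds only the bytes that remain.
    """
    if file_size < 0:
        raise ValueError("file_size must be non-negative")
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    blocks = []
    offset = 0
    while offset < file_size:
        # The final block may be shorter than block_size.
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


if __name__ == "__main__":
    # A 200 MB file: three full 64 MB blocks plus one 8 MB block.
    for offset, length in split_into_blocks(200 * MB):
        print(f"offset={offset // MB:>4} MB  length={length // MB:>3} MB")
```

Note that a 10 MB file yields a single 10 MB block: in HDFS, a file smaller than the block size does not occupy a full block's worth of underlying storage.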