Big Data Introduction

Big Data

Big data is a broad term for data

sets so large or complex that traditional data processing applications are

inadequate. Challenges include analysis, capture, data curation, search,

sharing, storage, transfer, visualization, and information privacy.

The term often refers simply to

the use of predictive analytics or certain other advanced methods to extract

value from data, and seldom to a particular size of data set. Accuracy in big

data may lead to more confident decision making. And better decisions can mean

greater operational efficiency, cost reductions and reduced risk.

Analysis of data sets can find

new correlations, to "spot business trends, prevent diseases, combat crime

and so on." Scientists, practitioners of media and advertising and

governments alike regularly meet difficulties with large data sets in areas

including Internet search, finance and business informatics. Scientists

encounter limitations in e-Science work, including meteorology, genomics,

connectomics, complex physics simulations, and biological and environmental

research.

Data sets grow in size in part

because they are increasingly being gathered by cheap and numerous

information-sensing mobile devices, aerial remote sensing, software logs,

cameras, microphones, radio-frequency identification (RFID) readers, and

wireless sensor networks. The world's technological per-capita capacity to

store information has roughly doubled every 40 months since the 1980s; as of

2012, every day 2.5 exabytes (2.5×10^18 bytes) of data were created. The challenge for

large enterprises is determining who should own big data initiatives that

straddle the entire organization.
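The 40-month doubling period quoted above can be converted into more familiar growth figures. The following is a minimal sketch of that arithmetic; the function name `growth_factor` is illustrative and not taken from any source, and the calculation assumes smooth exponential growth, which real storage capacity only approximates.

```python
DOUBLING_MONTHS = 40  # doubling period quoted in the text

def growth_factor(months, doubling_months=DOUBLING_MONTHS):
    """Multiplicative growth over `months` if capacity doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)

annual_rate = growth_factor(12) - 1   # fractional growth per year
decade_factor = growth_factor(120)    # 120 / 40 = 3 doublings

print(f"annual growth: {annual_rate:.1%}")
print(f"growth over a decade: {decade_factor:.0f}x")
```

Under this assumption, doubling every 40 months corresponds to roughly 23 percent growth per year, or an eightfold increase per decade.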

Work with big data is necessarily

uncommon; most analysis is of "PC size" data, on a desktop PC or

notebook that can handle the available data set. Relational database management

systems and desktop statistics and visualization packages often have difficulty

handling big data. The work instead requires "massively parallel software

running on tens, hundreds, or even thousands of servers". What is

considered "big data" varies depending on the capabilities of the

users and their tools, and expanding capabilities make Big Data a moving

target. Thus, what is considered to be "Big" in one year will become

ordinary in later years. "For some organizations, facing hundreds of

gigabytes of data for the first time may trigger a need to reconsider data

management options. For others, it may take tens or hundreds of terabytes

before data size becomes a significant consideration."

Characteristics

Big data can be described by the

following characteristics:

Volume - The quantity of data generated. The size of the data determines its value and potential, and whether it can be considered Big Data at all. The name ‘Big Data’ itself refers to size, hence this characteristic.

Variety - The category to which the data belongs. Analysts who work closely with the data need to know this category so that they can use the data effectively and to their advantage, which in turn upholds the importance of the Big Data.

Velocity - The speed at which data is generated and processed to meet the demands and challenges that come with growth and development.

Variability - The inconsistency that the data can show at times. This can be a problem for analysts because it hampers their ability to handle and manage the data effectively.

Veracity - The quality of

the data being captured can vary greatly. Accuracy of analysis depends on the

veracity of the source data.

Complexity - Data management can become very complex, especially when large volumes of data come from multiple sources. The data must be linked, connected and correlated before the information it is supposed to convey can be grasped. This situation is therefore termed the ‘complexity’ of Big Data.

Architecture:

In 2004, Google published a paper on a process called MapReduce that used a massively parallel architecture. The MapReduce framework provides a parallel processing model and an associated implementation for processing huge amounts of data. With MapReduce, queries are split, distributed across parallel nodes and processed in parallel (the Map step). The results are then gathered and delivered (the Reduce step). The framework was very successful, so others wanted to replicate the algorithm. An implementation of the MapReduce framework was therefore adopted by an Apache open source project named Hadoop.
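The Map and Reduce steps described above can be illustrated with a word count, the usual teaching example. This is only a single-process sketch in plain Python, not Google's or Hadoop's implementation: the function names are illustrative, and on a real cluster each `map_step` call would run on a different node while the grouping step would move data across the network.

```python
from collections import defaultdict

def map_step(chunk):
    """Map: emit a (word, 1) pair for every word in one chunk of input."""
    return [(word.lower(), 1) for word in chunk.split()]

def shuffle(pairs):
    """Group emitted values by key so each key can be reduced independently."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_step(values):
    """Reduce: combine all values emitted for one key."""
    return sum(values)

def word_count(chunks):
    # Each chunk is mapped on its own; here the calls simply run one after another.
    mapped = [pair for chunk in chunks for pair in map_step(chunk)]
    return {word: reduce_step(vals) for word, vals in shuffle(mapped).items()}

chunks = ["big data is big", "data about data"]
counts = word_count(chunks)
print(counts)
```

Because each chunk is mapped without reference to any other chunk, and each key is reduced without reference to any other key, both steps can be spread across as many machines as there are chunks or keys.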

MIKE2.0 is an open approach to

information management that acknowledges the need for revisions due to big data

implications in an article titled "Big Data Solution Offering". The

methodology addresses handling big data in terms of useful permutations of data

sources, complexity in interrelationships, and difficulty in deleting (or

modifying) individual records.

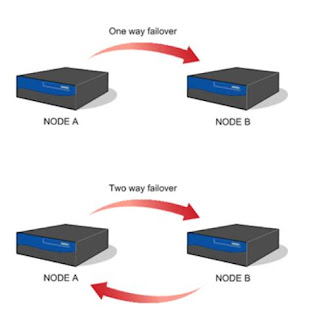

Recent studies show that a multiple-layer architecture is one option for dealing with big data. A distributed parallel architecture distributes data across multiple processing units, and the parallel processing units deliver data much faster by improving processing speeds. This type of architecture inserts data into a parallel DBMS, which uses the MapReduce and Hadoop frameworks. Such a framework aims to make the processing power transparent to the end user by using a front-end application server.
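The idea of hiding the parallel processing units behind a front end can be sketched as follows. This is a toy illustration under stated assumptions, not a parallel DBMS: threads stand in for separate processing units, round-robin partitioning is just one possible distribution scheme, and the names `partition`, `unit_sum` and `front_end_total` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(records, n_units):
    """Spread records across n_units partitions in round-robin order."""
    return [records[i::n_units] for i in range(n_units)]

def unit_sum(part):
    """Work done by one processing unit on its own partition."""
    return sum(part)

def front_end_total(records, n_units=4):
    """Front end: callers ask for a total and never see the partitioning."""
    parts = partition(records, n_units)
    with ThreadPoolExecutor(max_workers=n_units) as pool:
        partials = list(pool.map(unit_sum, parts))
    return sum(partials)

total = front_end_total(list(range(1, 101)))
print(total)  # 5050
```

The caller only ever sees `front_end_total`; how many units exist and how the data is split among them can change without affecting the calling code.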

Big Data Analytics for Manufacturing Applications can be based on a 5C architecture (connection, conversion, cyber, cognition, and configuration).

Big Data Lake - With the changing face of the business and IT sectors, the capture and storage of data has evolved into a sophisticated system. The big data lake allows an organization to shift its focus from centralized control to a shared model in order to respond to the changing dynamics of information management. This enables quick segregation of data into the data lake, thereby reducing overhead time.

Applications

Big data has increased the demand

for information management specialists so much that Software AG, Oracle Corporation,

IBM, Microsoft, SAP, EMC, HP and Dell have spent more than $15 billion on

software firms specializing in data management and analytics. In 2010, this

industry was worth more than $100 billion and was growing at almost 10 percent

a year: about twice as fast as the software business as a whole.

Developed economies make

increasing use of data-intensive technologies. There are 4.6 billion

mobile-phone subscriptions worldwide, and between 1 billion and 2 billion people

access the internet. Between 1990 and 2005, more than 1 billion people

worldwide entered the middle class, which means that more people became literate as

their incomes rose, which in turn led to information growth. The

world's effective capacity to exchange information through telecommunication

networks was 281 petabytes in 1986, 471 petabytes in 1993, 2.2 exabytes in

2000, 65 exabytes in 2007 and it is predicted that the amount of traffic flowing

over the internet will reach 667 exabytes annually by 2014. It is estimated

that one third of the globally stored information is in the form of

alphanumeric text and still image data, which is the format most useful for

most big data applications. This also shows the potential of yet unused data

(i.e. in the form of video and audio content).

While many vendors offer

off-the-shelf solutions for Big Data, experts recommend the development of

in-house solutions custom-tailored to solve the company's problem at hand if

the company has sufficient technical capabilities.

Manufacturing

According to the TCS 2013 Global Trend Study, improvements in supply planning and product quality provide the greatest benefit of big data for manufacturing. Big data provides an infrastructure for transparency in the manufacturing industry, that is, the ability to unravel uncertainties such as inconsistent component performance and availability. Predictive manufacturing, as an applicable approach toward near-zero downtime and transparency, requires a vast amount of data and advanced prediction tools to systematically process data into useful information. A conceptual framework of predictive manufacturing begins with data acquisition, where different types of sensory data can be acquired, such as acoustics, vibration, pressure, current, voltage and controller data. This vast amount of sensory data, together with historical data, makes up the big data in manufacturing. The generated big data acts as the input to predictive tools and preventive strategies such as Prognostics and Health Management (PHM).
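To make the flow from sensory data to a predictive tool concrete, here is a deliberately simple sketch. It is not a PHM algorithm from any source: a fixed three-sigma threshold against a healthy baseline stands in for real prognostic tools, the vibration values are invented for illustration, and the function name `health_alerts` is hypothetical.

```python
from statistics import mean, stdev

def health_alerts(baseline, readings, threshold=3.0):
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the healthy baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    return [i for i, x in enumerate(readings) if abs(x - mu) > threshold * sigma]

# Historical data recorded while the machine was known to be healthy (made-up values).
baseline_vibration = [0.50, 0.52, 0.49, 0.51, 0.48, 0.50, 0.53, 0.47]

# Newly acquired sensory data (made-up values).
new_vibration = [0.51, 0.49, 0.95, 0.50]

alerts = health_alerts(baseline_vibration, new_vibration)
print(alerts)  # [2]
```

Here the historical data defines what normal looks like, and the new sensory data is compared against it; practical systems replace the threshold rule with models that also estimate remaining useful life.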

Cyber Physical Models:

Current PHM implementations mostly use data gathered during actual usage, whereas analytical algorithms can perform more accurately when they include more information from throughout the machine’s lifecycle, such as system configuration, physical knowledge and working principles. There is a need to systematically integrate, manage and analyze machinery or process data during the different stages of the machine life cycle, in order to handle data and information more efficiently and to achieve better transparency of machine health condition for the manufacturing industry.

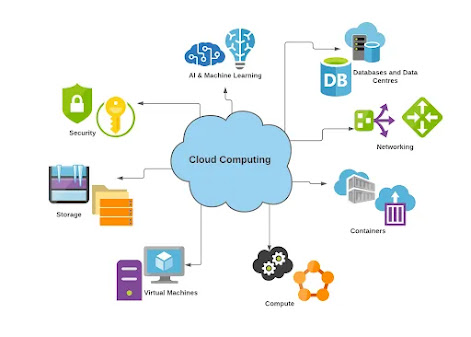

With this motivation, a cyber-physical (coupled) model scheme has been developed (see http://www.imscenter.net/cyber-physical-platform). The coupled model is a digital twin of the real machine that operates on a cloud platform and simulates the health condition using integrated knowledge from both data-driven analytical algorithms and other available physical knowledge. It can also be described as a 5S systematic approach consisting of Sensing, Storage, Synchronization, Synthesis and Service. The coupled model first constructs a digital image from the early design stage.

System information and physical knowledge are logged during product design, and a simulation model is built from them as a reference for future analysis. Initial parameters may be statistically generalized, and they can be tuned by parameter estimation using data from testing or from the manufacturing process. After that, the simulation model can be considered a mirror image of the real machine, able to continuously record and track machine condition during the later utilization stage. Finally, with the ubiquitous connectivity offered by cloud computing technology, the coupled model also gives factory managers better access to machine condition in cases where physical access to the actual equipment or machine data is limited.
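The tuning and tracking steps described above can be sketched with a one-parameter model. Everything here is an assumption made for illustration: the linear model `temp = k * load`, the least-squares estimator, the drift tolerance, and all data values are hypothetical and are not taken from the coupled-model scheme itself.

```python
def estimate_gain(loads, temps):
    """Least-squares estimate of k in the assumed model temp = k * load."""
    return sum(l * t for l, t in zip(loads, temps)) / sum(l * l for l in loads)

def residuals(k, loads, temps):
    """Difference between measured values and what the tuned model predicts."""
    return [t - k * l for l, t in zip(loads, temps)]

# Testing stage: tune the parameter from measured data (made-up values).
test_loads = [1.0, 2.0, 3.0, 4.0]
test_temps = [2.1, 3.9, 6.0, 8.0]
k = estimate_gain(test_loads, test_temps)

# Utilization stage: compare the real machine with its mirrored model.
live_loads = [2.0, 3.0]
live_temps = [4.0, 7.5]
TOLERANCE = 0.5  # hypothetical limit on acceptable deviation
drift = [i for i, r in enumerate(residuals(k, live_loads, live_temps))
         if abs(r) > TOLERANCE]

print(f"estimated k = {k:.3f}")
print("readings that deviate from the model:", drift)
```

Once the parameter is tuned, the model serves as the reference: a growing gap between measured and predicted values is the signal that the machine's condition has changed.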

CONCLUSION

The availability of Big Data,

low-cost commodity hardware, and new information management and analytic

software have produced a unique moment in the history of data analysis. The

convergence of these trends means that we have the capabilities required to

analyze astonishing data sets quickly and cost-effectively for the first time

in history. These capabilities are neither theoretical nor trivial. They

represent a genuine leap forward and a clear opportunity to realize enormous

gains in terms of efficiency, productivity, revenue, and profitability. The Age

of Big Data is here, and these are truly revolutionary times if both business

and technology professionals continue to work together and deliver on the

promise.
